- How to install Spark on Ubuntu: download
- How to install Spark on Ubuntu: update
- How to install Spark on Ubuntu: zip

Unzip the downloaded tar file.

How to install Spark on Ubuntu: download

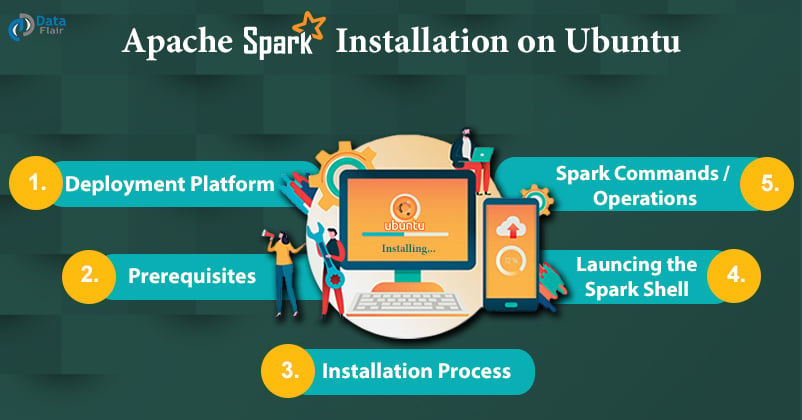

In this Spark tutorial, we go through a step-by-step process to make the environment ready for Spark, and then install Apache Spark itself. Start by downloading the Apache Spark tar file. Once Spark is installed and spark-shell is running, the `:quit` command exits the Scala prompt of spark-shell.
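The name of the tar file depends on both the Spark version and the Hadoop build it was packaged for. The helper below builds the download URL from those two values. It is a minimal sketch that assumes the naming scheme used on archive.apache.org (`spark-<version>/spark-<version>-bin-hadoop<hadoop>.tgz`). The function name `spark_url` is hypothetical, and so is the choice of the Hadoop 3.2 build. Check the download page for the builds that are actually published for your version.

```shell
# Hypothetical helper: print the Apache archive URL of a prebuilt Spark tarball.
# Assumes the archive.apache.org layout spark-<ver>/spark-<ver>-bin-hadoop<hver>.tgz
spark_url() {
  local spark_version="$1"   # e.g. 3.1.1
  local hadoop_version="$2"  # e.g. 3.2 (assumed build, check the download page)
  echo "https://archive.apache.org/dist/spark/spark-${spark_version}/spark-${spark_version}-bin-hadoop${hadoop_version}.tgz"
}
```

For example, `wget "$(spark_url 3.1.1 3.2)"` would fetch `spark-3.1.1-bin-hadoop3.2.tgz` into the current directory.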

How to install Spark on Ubuntu: update

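Spark 2.3 requires Java 8, so that is where we will begin. Update the package index, upgrade the installed packages, and install OpenJDK 8:

```shell
sudo apt-get update
sudo apt-get upgrade
sudo apt-get install openjdk-8-jdk
```

We will also install an SSH server (the openssh-server package) so that we can SSH into our Ubuntu machine.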
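To confirm which Java the `java` command now runs, use `java -version`. It prints to standard error, so redirect that output with `2>&1` when you want to capture it. The helper below is a hypothetical sketch, and the name `java_major_version` is ours. It extracts the major version from that output and handles both Java 8's `1.8.0_xxx` numbering and the `11.0.x` style used from Java 9 on.

```shell
# Hypothetical helper: print the Java major version from `java -version` output.
# Java 8 and earlier report "1.8.0_xxx"; Java 9+ report "11.0.x", "17", etc.
java_major_version() {
  local raw
  # Take the quoted token on the first line, e.g. openjdk version "1.8.0_131"
  raw=$(printf '%s\n' "$1" | head -n 1 | sed -E 's/.*version "([^"]+)".*/\1/')
  case "$raw" in
    1.*) echo "$raw" | cut -d. -f2 ;;  # 1.8.0_131 -> 8
    *)   echo "$raw" | cut -d. -f1 ;;  # 11.0.2 -> 11
  esac
}
```

After installing openjdk-8-jdk, `java_major_version "$(java -version 2>&1)"` should print `8`, provided Java 8 is the default `java` on the system. If several JDKs are installed, `sudo update-alternatives --config java` selects the default.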

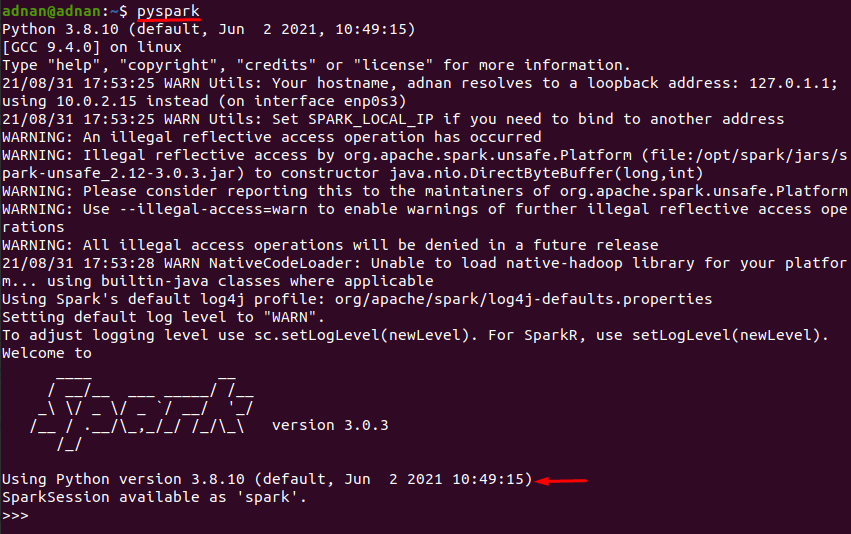

Open up your Ubuntu terminal and follow the steps below. Spark needs a Java installation to run, whether on a local machine or in a cluster; as noted above, Spark 2.3 requires Java 8.

Now go to the official Apache Spark download page and grab the latest version (3.1.1 at the time of writing this article). Alternatively, you can use the wget command to download the file directly in the terminal. Change to the directory where you wish to keep the download; this tutorial has used the /DeZyre directory.

After unzipping the download, we copied the contents of the unzipped Spark folder to a folder named spark, and then moved the spark folder to /usr/lib/.

Now we need to set the SPARK_HOME environment variable and add its bin folder to the PATH. As a prerequisite, the JAVA_HOME variable should also be set. To set JAVA_HOME and add the /usr/lib/spark/bin folder to PATH, open ~/.bashrc with any editor. We shall use the nano editor here. No sudo is needed, because ~/.bashrc belongs to your own user:

```shell
nano ~/.bashrc
```

Add the following lines at the end of the ~/.bashrc file:

```shell
export JAVA_HOME=/usr/lib/jvm/default-java/jre
export SPARK_HOME=/usr/lib/spark
export PATH=$PATH:$SPARK_HOME/bin
```

If /usr/lib/jvm/default-java does not exist on your system, point JAVA_HOME at the installed JDK instead, for example /usr/lib/jvm/java-8-openjdk-amd64 on 64-bit Ubuntu.

Now that we have installed everything required and set up the PATH, we shall verify that Apache Spark has been installed correctly. Close the terminal that is already open and open a new one so that the updated ~/.bashrc is loaded. Then run:

```shell
spark-shell
```

Verify the versions of Spark, Java and Scala displayed during the start of spark-shell. The sample output below was captured with an older Spark 2.x build (Scala 2.11.8, Java 1.8.0_131), so your version numbers will differ:

```
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
17/08/04 03:42:23 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
17/08/04 03:42:23 WARN Utils: Your hostname, arjun-VPCEH26EN resolves to a loopback address: 127.0.1.1 using 192.168.1.100 instead (on interface wlp7s0)
17/08/04 03:42:23 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address
17/08/04 03:42:36 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException
Spark context available as 'sc' (master = local, app id = local-1501798344680).
Using Scala version 2.11.8 (OpenJDK 64-Bit Server VM, Java 1.8.0_131)
Type in expressions to have them evaluated.

scala>
```

If the scala> prompt appears, the latest Apache Spark is successfully installed on your Ubuntu 16 machine.
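If the new terminal reports that spark-shell cannot be found, the environment variables are the first thing to check. The function below is a hypothetical helper (`check_spark_env` is not part of Spark) and a minimal sketch that assumes the ~/.bashrc setup described above. It checks three things: that SPARK_HOME is set, that it contains an executable bin/spark-shell, and that $SPARK_HOME/bin is on the PATH.

```shell
# Hypothetical helper (not part of Spark): sanity-check the Spark environment.
check_spark_env() {
  # SPARK_HOME must be set, e.g. to /usr/lib/spark
  if [ -z "${SPARK_HOME:-}" ]; then
    echo "SPARK_HOME is not set" >&2
    return 1
  fi
  # The spark-shell launcher script lives in $SPARK_HOME/bin
  if [ ! -x "$SPARK_HOME/bin/spark-shell" ]; then
    echo "no executable spark-shell in $SPARK_HOME/bin" >&2
    return 1
  fi
  # Wrap PATH in colons so that the first and last entries also match
  case ":$PATH:" in
    *":$SPARK_HOME/bin:"*) echo "ok" ;;
    *) echo "$SPARK_HOME/bin is not on PATH" >&2; return 1 ;;
  esac
}
```

Running `check_spark_env` in the new terminal should print `ok`. Otherwise, the message on standard error points to the variable that still needs fixing.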

How to install Spark on Ubuntu: zip

Before setting up Apache Spark on your PC, unzip the downloaded file. The download is a .tgz archive, so open a terminal and run the tar command from the directory that contains it.
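The unzip step and the renaming of the extracted folder to spark can be combined in one small helper. This is a minimal sketch: `unpack_spark` is a hypothetical name, not a standard tool, and the helper assumes the archive has a single top-level folder, as the prebuilt Spark downloads do (for example, spark-3.1.1-bin-hadoop3.2/).

```shell
# Hypothetical helper: extract a Spark .tgz and rename its top-level folder to "spark".
# Assumes the archive contains exactly one top-level directory.
unpack_spark() {
  local tarball="$1"
  local dest_dir="${2:-.}"
  local top
  # Refuse to continue if a spark folder is already there, so nothing gets overwritten
  if [ -e "$dest_dir/spark" ]; then
    echo "$dest_dir/spark already exists" >&2
    return 1
  fi
  # First archive entry, e.g. "spark-3.1.1-bin-hadoop3.2/", cut at the first slash
  top=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)
  # -x extract, -z gunzip, -f archive file, -C target directory
  tar -xzf "$tarball" -C "$dest_dir" || return 1
  mv "$dest_dir/$top" "$dest_dir/spark" || return 1
  echo "$dest_dir/spark"
}
```

In the download directory, `unpack_spark spark-3.1.1-bin-hadoop3.2.tgz` followed by `sudo mv spark /usr/lib/` leaves Spark at /usr/lib/spark. That file name assumes the Hadoop 3.2 build.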
